Back in 2017 the crypto market was super hot and there were lots of opportunities to make money.

One of the ways that I made money at the time was by running a trading bot. The bot wasn’t designed to make directional bets on the market. Instead, it would try to find places where an asset was mispriced across different exchanges and make money “risk free”.

For example, let’s say that on Kraken Bitcoin was $1240 and on Coinbase it was $1245 (yes, these were the prices back then). If you sold Bitcoin on Coinbase and bought it on Kraken you could make $5. This assumes you had USD and Bitcoin in the right spots, which is a separate problem.
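Here is a minimal sketch of that arithmetic in Python. The function name and the `fee_rate` parameter are my own illustration, not something the bot actually used, and the 0.25% fee in the second call is a made-up number to show how thin the margin is.

```python
def arbitrage_profit(buy_price, sell_price, quantity=1.0, fee_rate=0.0):
    """USD profit from buying on the cheaper exchange and selling on the pricier one.

    fee_rate is a hypothetical proportional fee charged on each leg of the trade.
    """
    cost = buy_price * quantity * (1 + fee_rate)
    proceeds = sell_price * quantity * (1 - fee_rate)
    return proceeds - cost


# Buy 1 BTC on Kraken at $1240, sell 1 BTC on Coinbase at $1245, no fees.
print(arbitrage_profit(1240, 1245))  # 5.0

# Same trade with an assumed 0.25% fee per leg: the result is negative.
print(arbitrage_profit(1240, 1245, fee_rate=0.0025))
```

With a spread this small, even a modest fee on each side is enough to turn the trade into a loss.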

The bot ran thousands of these trades and made a steady stream of cash, along with an accounting nightmare when it came time to file taxes.

Well…I should say that it made a lot of money until it lost a lot of money.

Sometimes you would buy on Kraken and, for whatever reason, couldn’t sell instantly on Coinbase, leaving you long more Bitcoin than you wanted. Then the market would go down 5% and wash out all of the gains you made that day. Or sometimes ETH would get stuck in transit because CryptoKitties trades were clogging up the network. Or sometimes you would be trying to run a USDT arbitrage only to find out that Tether wasn’t able to wire the money to you because they lost their bank account.

No matter the reason, there was usually some crazy event that would come along and wipe out the gains. So it turned out that running these little games was really just picking up pennies in front of a steamroller. This is another way of saying that you are taking bets that are asymmetric the wrong way: little gain, lots of risk of loss. Sure, you can make money doing it, until you trip and get rolled over by the machine.

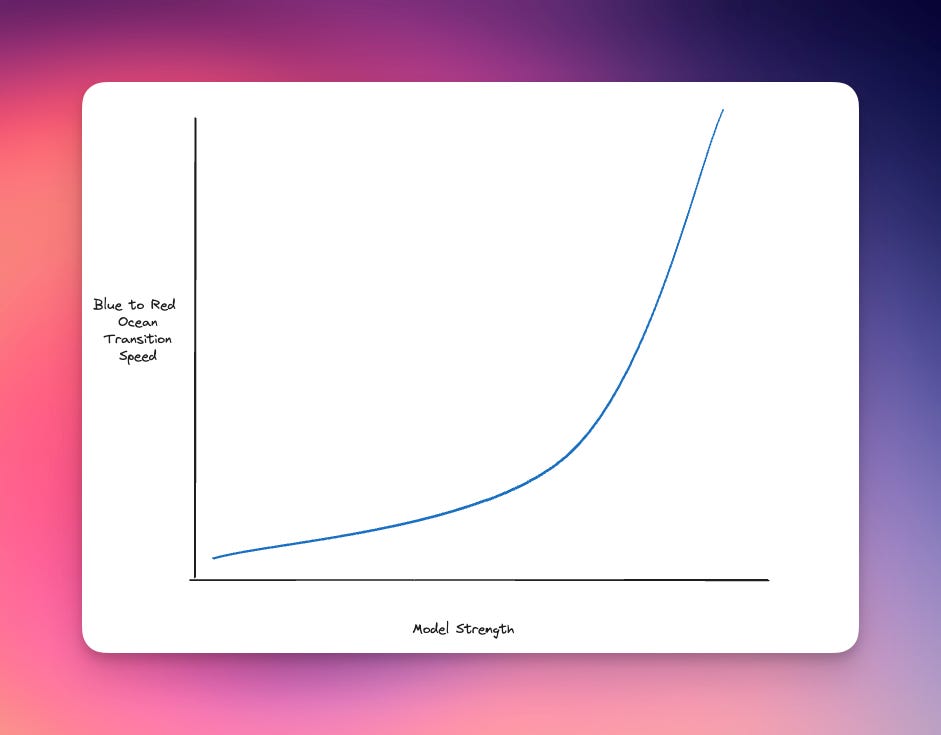

This is happening in AI right now. Companies are releasing products and getting real revenue traction, only to have OpenAI or Anthropic or Google or Microsoft or one of the ten thousand other companies bring a similar value prop to market. When this happens, the once blue waters a startup was operating in instantly turn red, and its ability to create durable long-term value is in question.

LLMs have reduced the cost and cycle time of software development substantially. This is the main reason that blue oceans can turn into red oceans faster than ever before. As AI systems get stronger, the speed at which the oceans turn red is only going to accelerate.

So maybe it’s better to leave the pennies be and find valuable things to build deeper in the stack, where the cost to create value is higher. I have lots of thoughts here but I will leave those to future posts.

🤖 AI

A friend sent me an old post from the MIT Tech Review that made the claim "Making an image with generative AI uses as much energy as charging your phone".

Initially I thought this was a clickbait article, but after spending some time with o1, the article, and some data, I realized that it may not be that far off. The iPhone Pro Max has a 17.10 watt-hour battery. I run a 1000 watt power supply on the server in my home lab. At peak draw, the upper bound on energy consumption is 16.7 watt-hours per minute (1000 W × 1/60 h), basically one Pro Max. Assuming it takes 1 minute at best to generate an image and I’m drawing the full output of my power supply the whole time, this “one cellphone energy per image” claim is somewhat accurate.
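The back-of-the-envelope math can be checked with a short Python sketch. The constants come straight from the numbers above; the variable and function names are just mine, and the whole thing is an upper bound that assumes the power supply runs flat out for the full minute.

```python
PSU_WATTS = 1000          # rated power supply on the home lab server (upper bound)
PHONE_BATTERY_WH = 17.10  # iPhone Pro Max battery capacity in watt-hours
GENERATION_MINUTES = 1    # assumed time to generate one image


def energy_wh(power_watts, minutes):
    """Energy in watt-hours for a constant power draw over some minutes."""
    return power_watts * minutes / 60


image_wh = energy_wh(PSU_WATTS, GENERATION_MINUTES)
phone_charges = image_wh / PHONE_BATTERY_WH

print(f"{image_wh:.1f} Wh per image, {phone_charges:.2f} phone charges")
# 16.7 Wh per image, 0.97 phone charges
```

A real GPU run would likely draw less than the supply's rated maximum, so treat this as a ceiling rather than a measurement.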

One thing from this is clear. The world doesn’t have enough power for the current models to work at scale. To fix this, we could:

1. Scale the amount of power that can be supplied (see the Microsoft nuke plant deal)
2. Make models efficient (this is happening)
3. Use smaller / more specialized models and run them on lower energy hardware (ASIC)

Option 1 requires a long timeline because building things in meatspace is hard. Option 2 requires more science and is thus less predictable. Option 3 is probably the most viable in the short run, which is probably why Etched has raised so much money. I would love to find a way to get exposure to this space.

📊 Data

🔗 Cool *hit

The Genie 2 model out of DeepMind lets you create generative worlds. Gaming is the natural first thing to think about here but the other (I think more impactful) application is training. Not only training agents but also training people.

Anthropic released Model Context Protocol which lets developers build more composable infrastructure. This is good because now companies can start to build i/o services around internal tools that they want agents / LLMs to work with.

Human Layer makes it easy to create human-in-the-loop agent workflows.

Scrapybara is letting people create remote desktops for agents. The previous remote desktop market was bound by the number of people in the world with access to the internet. The remote desktops for agents market will be much larger than the market for desktops for humans. This makes me think: what will the world look like when there are more agents doing things online than humans?

🔈 What I'm listening to

Gwern Branwen's Dwarkesh interview. Gwern’s view is that AGI systems are very close (years away), so it’s probably a waste of time to work on hard problems. He says that unless they are fun to work on, it’s probably better to just write the problems down and in a few years give them to AI to solve.

Kaskade Christmas Volume 2 because electronic music + Christmas = a great vibe.