DISALLOW

My bot's experience trying to do a mundane task

I have a GPT that I use to help me understand more about customers for a product in my portfolio. The GPT visits company websites, collects information, and summarizes it for me so that I can understand the customer better.

This morning, while using the GPT to analyze some of our new customers, I ran into an issue.

The GPT said that it couldn’t help with one of the customers (Rappi) due to a “restriction,” and it fell back on information already encoded in the model. Here is a screenshot of the response.

After some digging, I found that Rappi’s robots.txt disallows GPTBot, OpenAI’s web crawler. This setting tells OpenAI’s crawler to stay off Rappi’s site.
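For anyone who hasn’t looked inside one of these files, here is a minimal sketch of how a single robots.txt rule can block one crawler while leaving everyone else alone. It uses Python’s standard-library `urllib.robotparser`. The robots.txt content is illustrative (not a copy of Rappi’s actual file), and `example.com` plus the helper `is_allowed` are placeholders I made up for the example.

```python
from urllib.robotparser import RobotFileParser

# Illustrative robots.txt (NOT Rappi's real file): block OpenAI's GPTBot
# everywhere, allow every other crawler.
ROBOTS_TXT = """\
User-agent: GPTBot
Disallow: /

User-agent: *
Allow: /
"""


def is_allowed(robots_txt: str, user_agent: str, url: str) -> bool:
    """Return True if robots_txt permits user_agent to fetch url."""
    parser = RobotFileParser()
    # parse() takes an iterable of lines instead of fetching over the network.
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    url = "https://example.com/restaurants"
    for agent in ("GPTBot", "Googlebot"):
        print(agent, is_allowed(ROBOTS_TXT, agent, url))
```

Run as-is, GPTBot is refused and Googlebot is allowed. Note that robots.txt is a request that well-behaved crawlers honor, not an access control mechanism.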

Rappi is probably just trying to keep OpenAI from crawling its site for training data, but the side effect is that the GPT can’t use the site at all. More broadly, this limits the site’s usefulness in ways the Rappi team probably doesn’t intend.

For example, maybe you want to build a GPT that uses Rappi to help you and your family find a restaurant everyone will like. You can’t do it without building custom tools.

Or maybe you want a GPT that makes a personalized recommendation for you from the Boicot Cafe menu on Rappi. You are out of luck.

More importantly, once you have an OpenAI GPT-o5-based personal assistant managing your diet, it won’t be able to order that optimal meal for you from one of Rappi’s restaurants.

These are just a few ideas I came up with. But the more fundamental issue is that Rappi is invisible to OpenAI’s models. With this setting, Rappi is implicitly saying that its user interface and recommender are better than OpenAI’s ability to find the information the user wants. Which they may be.

For now.

But what about the future?

The information organizers

When the internet came along, pamphlets, magazines, and other print media became much less relevant. If your business communicated primarily through a magazine or print newspaper, you needed to retool and build a website.

In a future where more people interact with LLMs, “websites” and “apps” as we know them could follow the pamphlet to the grave.

Yes, I know the argument: “Not all user interfaces can be text-based. Booking a trip to Paris using text alone would be a nightmare…” But strong AI can simply build “sandcastles” (small, disposable applications) that let you interact with data much the way you use apps today.

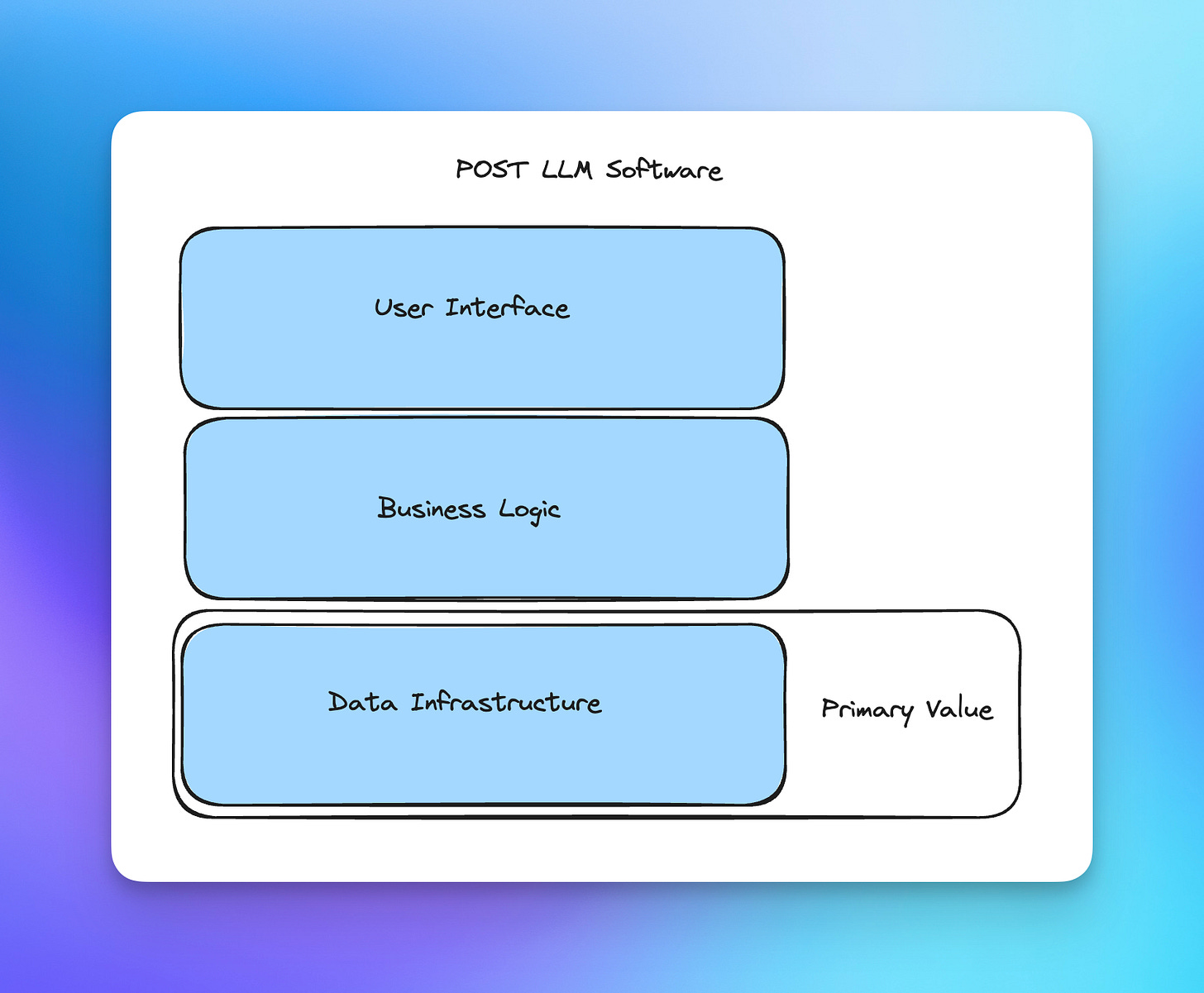

But when you remove the UI from something like Rappi, what is left?

As the music industry moved from records to cassettes to CDs to MP3s, the core value (the music) didn’t change. The only thing that changed was its user interface. It turns out that, all along, music was just information organized in a way that humans love to process by ear. Over time, the market has kept searching for the easiest way to package and deliver music for human consumption.

Like the music industry, the information technology industry was built on the idea that the market needed to find the ideal way to process information about the real world for human consumption. Since the 1980s, the manifestation of this idea is what we call “software.”

A software company creates (and captures) value by organizing certain information and putting it into a user interface that is easy for humans to interact with. Infrastructure is an afterthought that only really needs to be addressed at scale.

The entire tech industry was built on this premise.

For example:

Airbnb: an organization of all of the vacant condos in Paris so that you can rent one while you are there.

Salesforce: an organization of your accounts so that you can track who your top accounts were last quarter and how much they are likely to spend this quarter.

But what happens when we have stronger AI?

As AI gets stronger, the interface and business logic matter much less, since the chat/voice interface or sandcastles can handle all of that.

Stronger AI can just look through a list of Parisian condos and contact the owners (or their agents) on your behalf to find out whether the condos are vacant.

Stronger AI can read all of last quarter’s contracts, sales emails, and sales call transcripts to help you understand what your clients may spend.

This means the valuable part of what a company like Rappi does becomes the act of going out and “getting” the information that needs to be represented.

If things pan out this way, infrastructure goes from being an “afterthought” in building a software company to the primary thing software companies should focus on.

Bottom line: if agents take hold the way we all think they will, much of the internet will need to be retooled toward aggregating information for model consumption. Future software companies will need to look more like databases or infrastructure providers than like the apps we know and love today.

This puts existing “user-centric” companies like Rappi in an interesting position.

They will want to keep their users by restricting AI’s access. But in doing so, they could become less relevant, since there is a good chance that user centricity will no longer matter.

User-centric information organizers likely lack the internal alignment, metrics, and capabilities to become model-centric information organizers. I wonder who will step in to fill the void, or whether some companies will recognize this early and adapt.

💡 Steal this idea

Most non-programmers don’t realize that any given piece of software is really just a bunch of smaller pieces of software chained together.

At the heart of all this are “package managers” like APT, Pacman, npm, pip 🤮, etc. These programs let users download software onto their systems for programming tasks or everyday computer use.

The best part of package managers is that you can find the thing you need and install it easily.

So far, “tool use” is the core idea that makes language models and agents useful for completing tasks. Tool use simply lets the model execute some prewritten code on your machine. For example, an agent may use one tool to check your inbox for new messages, then use another tool that plays a new-mail notification to let you know you have a new message.
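To make the inbox example concrete, here is a minimal sketch of the pattern: a registry of prewritten functions the model is allowed to call by name, plus a dispatcher that runs whichever one it picks. The tool names (`check_inbox`, `play_notification`) and their stubbed behavior are hypothetical; a real agent would hit a mail API and an audio device, and the model (not a hard-coded plan) would decide which tool to call next.

```python
from typing import Any, Callable, Dict, List


# Hypothetical tools. These stubs just return data so the control flow is visible.
def check_inbox() -> List[str]:
    """Pretend to fetch the subjects of unread messages."""
    return ["Invoice #1042", "Lunch on Friday?"]


def play_notification(count: int) -> str:
    """Pretend to play a chime; return what would be announced."""
    return f"Ding! You have {count} new message(s)."


# The set of tools the model is allowed to call, keyed by name.
TOOLS: Dict[str, Callable[..., Any]] = {
    "check_inbox": check_inbox,
    "play_notification": play_notification,
}


def call_tool(name: str, **kwargs: Any) -> Any:
    """Execute a tool the model asked for by name."""
    if name not in TOOLS:
        raise KeyError(f"unknown tool: {name}")
    return TOOLS[name](**kwargs)


def run_agent() -> str:
    # Hard-coded two-step plan standing in for the model's decisions.
    messages = call_tool("check_inbox")
    if messages:
        return call_tool("play_notification", count=len(messages))
    return "No new mail."


if __name__ == "__main__":
    print(run_agent())
```

The dispatcher is the important part: the model only ever emits a tool name and arguments, and your code decides what actually runs.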

Tool-use workflows like this are easy until you need to do something more complex. For example, the MailMentor agent loads a headless browser (behind a proxy), executes dozens of searches, and looks through websites to accomplish its goal. Getting all of this to work was a real pain and required a lot of custom infrastructure.

I wish there was a way to develop my tools in a separate system.

Then I want to give my agent a single tool that lets it search through all of the available tools (like APT). The agent could select the best tool for the job and run it on a separate runtime via an API.
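Here is a toy sketch of the shape I have in mind, under loudly stated assumptions: the catalog entries, `search_tools`, and `run_remote` are all hypothetical, ranking is plain keyword overlap (a real index would more likely use embeddings or curated metadata), and `run_remote` only builds the request it would send to a separate tool runtime instead of calling any real API.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass
class ToolSpec:
    name: str
    description: str


# Hypothetical catalog, like an APT package index but for agent tools.
CATALOG: List[ToolSpec] = [
    ToolSpec("headless_browse", "load a web page in a headless browser behind a proxy"),
    ToolSpec("web_search", "run a web search and return result links"),
    ToolSpec("send_email", "send an email message"),
]


def search_tools(query: str, limit: int = 2) -> List[ToolSpec]:
    """Rank catalog tools by how many query words appear in their description."""
    words = set(query.lower().split())
    scored: List[Tuple[int, ToolSpec]] = []
    for tool in CATALOG:
        overlap = len(words & set(tool.description.lower().split()))
        if overlap:
            scored.append((overlap, tool))
    # Highest overlap first; tools with zero overlap were already dropped.
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [tool for _, tool in scored[:limit]]


def run_remote(tool: ToolSpec, **kwargs: str) -> Dict[str, object]:
    """Stand-in for calling a separate tool runtime; just returns the request."""
    return {"tool": tool.name, "args": kwargs, "status": "queued"}


if __name__ == "__main__":
    best = search_tools("search the web for restaurant links")[0]
    print(run_remote(best, query="restaurants near me"))
```

The agent only needs two capabilities here: find a tool and invoke it remotely. Everything heavy (browsers, proxies) lives behind the runtime.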

Someone please build this so that I don’t need to.

📊 Data

🤖 AI

OpenAI’s o3 was announced last week and blew everyone’s mind with its score on the ARC-AGI benchmark.

Hume’s Empathetic Voice Interface allows you to create custom AI voices by adjusting inputs.

Over the last few months, I’ve noticed more “AI Solutions Architect” jobs popping up. These positions look like a combination of AI-oriented staff engineer and product manager.

🔗 Cool *hit

Google has a pretty cool “paper phone” experiment: a piece of paper you print out that mimics some of a cellphone’s functionality so you can be more present.

Enron is relaunching. Nuff said.

RoboCasa lets you build simulated environments for robotics projects.

🔈 What I'm listening to

Mossad ran an online marketing campaign to sell Hezbollah the explosive pagers.

Huberman talks about how our brains maintain neural plasticity as we age.

📚 What I'm reading

Alex Perelman writes about his difficult process of self-examination and reminds us that “you are the creator of your life.”

Xuan is pretty upset about the existence of o3.